Since the beginning, Puzzled Pint has logged team standings each month: a table of which teams attended, their solve times, and so on. It was a fun idea at first, but as Puzzled Pint has matured, the published standings have become a source of friction. Internally, we use them as a rough gauge of how hard we thought the month’s puzzles were vs. reality. But publishing them on the site is extra work, and their value has recently been called into question.

From a player’s point of view, they are entirely artificial. “Solve time” means very little when local Game Control freely gives out hints. It’s also highly variable based on play style. Some cities eat dinner first and then jump into puzzles undistracted. Some solve puzzles more casually over food and drink. A few larger teams split the packet up, solving puzzles in parallel. Most teams focus on one puzzle at a time so that all players can savor the a-ha moments.

From Puzzled Pint Headquarters’ point of view, the public standings page is extra work and additional moving parts when producing events each month. For legacy reasons, most of the standings are manual operations, split between local GC and HQ. This was fine with one or two cities, but hasn’t scaled up well. HQ must wait until all the local city GC have entered their data, then an HQ volunteer finds time to clean up and normalize the entries for public consumption, copying between spreadsheets. We like to focus on finding authors, playtesting puzzles, helping with feedback and editing, onboarding new cities, and running events. Compared to the other responsibilities, updating standings often feels like busywork.

There’s also the philosophical angle in which Puzzled Pint is meant to be a beginner-friendly event. Some see standings as fostering a competitive environment by keeping score and highlighting the more experienced teams. Our Puzzled Pint Charter specifically states that we’re non-competitive with no prizes and no scoring. Are standings a form of score?

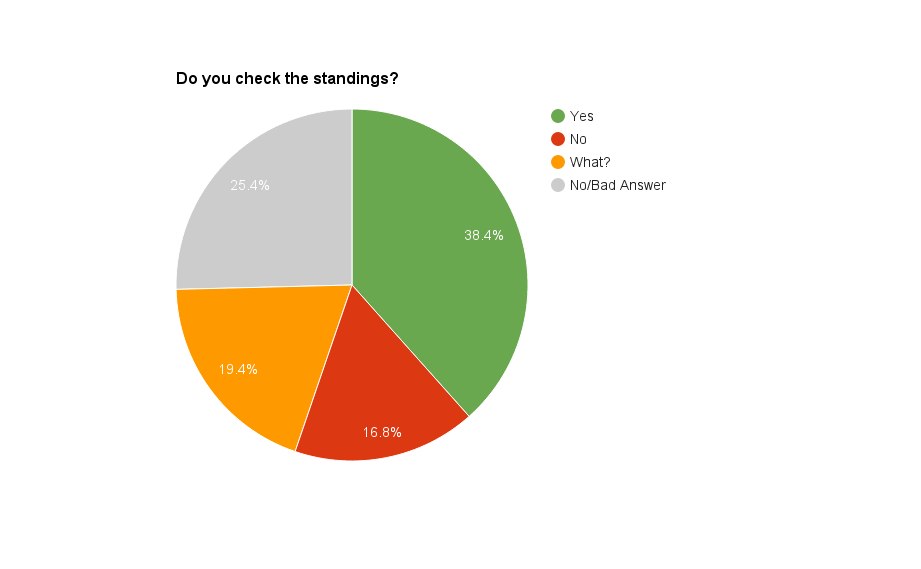

With that in mind, we presented teams with a related Question of the Month (QotM) in January: “After the event, do you check the standings?” with the following results:

| Answer | Team Count | Percent |
| --- | --- | --- |
| Yes | 121 | 38.4% |
| No | 53 | 16.8% |
| What standings? | 61 | 19.4% |
| No answer / bad answer | 80 | 25.4% |

Most of the entries in that last row were from teams that left the question blank. A few were from teams that checked conflicting answers: responses marking both Yes and No were thrown out. Teams that checked both No and What were counted as What.

Plotting the raw percentages gives us this chart:

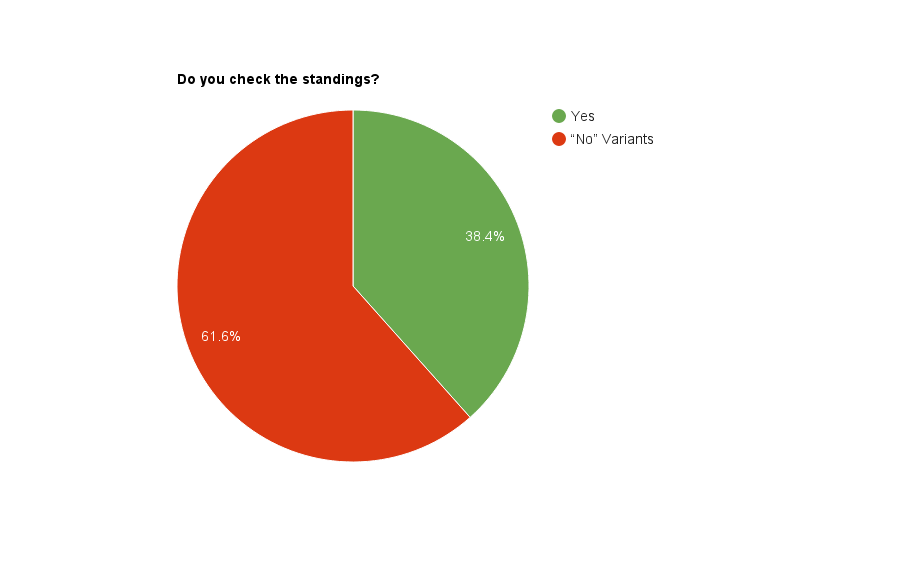

We can probably lump together the No and most of the What answers. There’s a chance that a few Whats might convert to Yeses once they learn that the standings exist, but it’s likely only a small number of teams. The non-answers could go either way, but my experience in hearing from teams, local GC, and Twitter comments is that the folks who love standings are very vocal about loving them (and about getting them posted in a timely manner). The people who don’t care or outright don’t like them tend to be a little more apathetic or quiet. Given this, I could make a broad, sweeping assumption that the Yes folks all said yes and that the non-answer folks are probably in the No or What category.
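For anyone who wants to check the arithmetic behind that lumping, here is a minimal sketch in Python. The counts come straight from the table above; the variable names and the rule that every non-Yes response counts as "does not check" are assumptions made only for illustration.

```python
# Response counts from the January QotM table.
counts = {
    "Yes": 121,
    "No": 53,
    "What standings?": 61,
    "No answer / bad answer": 80,
}

total = sum(counts.values())

# Assumption: every response other than Yes is lumped into one
# "does not check" group, per the sweeping assumption described above.
checks = counts["Yes"]
does_not_check = total - checks


def percent(part, whole):
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


print(f"Checks standings: {checks} of {total} teams ({percent(checks, total)}%)")
print(f"Does not check:   {does_not_check} of {total} teams ({percent(does_not_check, total)}%)")
```

Under that lumping, roughly 38% of responding teams check the standings and roughly 62% do not.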

But do remember that this makes some sweeping assumptions that may not be entirely valid.

Looking at city-by-city data, the locations in which the Yes crew took the majority are: Austin, Chicago, Detroit, London, Phoenix, Eastside Seattle, and Washington DC.

So what does this all mean? It means we have better visibility into who likes standings. In the short term, probably not much will change. The person who has been responsible for updating standings is stepping away from them, so we’re looking for a new volunteer, preferably a local GC member from a Puzzled Pint city. In the long term, we have to decide whether we’re going to spend the time and effort to better automate the process, or decide that what the standings have become is now counter to the tenets of Puzzled Pint.

I enjoy checking my team’s results in the standings, but it sounds like consolidating them all into a single webpage is a lot of work for you. (And, as you say, it doesn’t add much value, because the numbers aren’t totally comparable between cities.)

It seems like a good solution would be to give each local Game Control access to their own city’s spreadsheet, and let each one do the update on their own time.

That removes overhead cost from headquarters, and it also could let some cities publish their results faster than they currently do.